Posted on 26th Mar, 2021 in Work Life

There's a story that has been rattling around in the back of my mind for nearly 2 years now. It is not a particularly pleasant or exciting tale. However, the events which unfolded over the course of weeks would go on to shape how I view my job and my career. So without further ado I present the opening chapter of the "Servergate" series. Much like my Suit Up Japan series, this is a tale of mismanagement and the ensuing woe. Yet it's different in that it's set in a professional environment that has chosen to forsake our protagonists.

Triage

Have you ever woken up knowing that today was going to be a bad day?

For those who don't know me, I hate mornings. In my opinion they're the worst and nobody should be allowed to conduct business in any single digit AM hour. That, in part, is probably why I had been at my current job for 8 years. I was a software developer at a local university so I had been spoiled. Certainly not by the pay (definitely not by the pay), but by quality of life. We worked 35-hour weeks and I had a choice of my start time (between 8 AM and 10 AM). Naturally I chose 10 AM since I'm allergic to mornings. This gave me the luxury of waking up around 8:30 and missing the brunt of rush hour.

However, it was half past 6 on the morning of May 9, 2019 and I was already awake for some peculiar reason. As I groggily fumbled in the dark for my phone to check the time, I noticed I had 3 missed calls and a dreaded "[Redacted Software A] Not Responding" email waiting for me. Reluctantly I got out of bed and headed for my computer. If history was anything to go by, I just needed to log in and reboot a web server and go back to bed. If I fixed it quickly enough, maybe I'd get to come in late to make up for having to exist at such an unholy hour.

The first emails from our monitoring systems would start arriving around 7:37 AM. By that point I was ignoring a growing number of emails that were flooding in to my inbox. I was now banging my head against my keyboard trying to figure out why I couldn't reach the server I thought I needed to reboot. The web server was actually a virtual machine, running on a cluster of servers in our on-premises data center. Normally I can just remote in to it, but failing that I should be able to access it from the management console. At 7:41 AM one of our toughest clients sent a terse reply to the "[Redacted Software A] Not Responding" email chain. "FYI. [Redacted Software B] is also down."

By the time 8 AM had rolled around, I was wide awake and nearing a panic. In the last hour, I had determined that I could reach neither the management console nor a growing number of our systems. Through some clever lateral movements I managed to get access to some of our management resources. I was beginning to suspect major networking issues. However, if that were the case there was no way I could fix those remotely. Fortunately, the first shift of developers were turning up to their desks by this time. So we decided to tag team the problem. Guile was first in to the office, so I dispatched him to the server room, to get some eyes on the situation and hopefully prevent me from having to go down there myself. Unfortunately this wouldn't be enough to save me the trip. At 9:18 AM I cancelled my morning meetings and got on the train heading towards the server room and my demise.

Antemortem

In order to understand the gravity of the predicament I found myself in, we must first go back in time. When I first started this job, my department consisted of only 5 people: 2 developers (Eagle and Ken), 1 sysadmin (Richard), our boss (M. Bison), and myself. Both our office space and our data center were in the subbasement of a landmarked building that was famous for being a deathtrap during the Industrial Age. If you've ever seen The IT Crowd, it was basically that.

Over the years we moved from stacks of single purpose servers to a virtualized environment. We would eventually lose Richard (and that's a whole story in itself). Since M. Bison refused to backfill his position, the senior-most developers wound up taking on his duties. I had been around long enough at this point to see Eagle leave over differences with M. Bison and Ken be promoted to the top technical position in our department. That also meant that I was now the second-most senior person on the team and would inherit the majority of Richard's duties. I had been there for the virtualization and played an integral role in building the 2nd incarnation of our virtual environment with Ken and our new software architect Ryu. However, I was by no means a suitable replacement for a systems administrator. At heart and on paper, I was a software engineer who coincidentally was intimately familiar with this data center.

In the same amount of time, our team grew from 5 people to over 70 (when you include student employees). Naturally, after years of lobbying, our department moved out of that space to a place with sunlight a little further uptown. The server room would remain where it originally was. The distance between us and the servers combined with the population growth of our team meant that now only a handful of people had any knowledge of our infrastructure and its secrets. With a handful of exceptions, most people only saw our servers once every couple of months when their turn in the weekly offsite tape backup rotation came.

Emergency Services Have Been Dispatched

As I boarded the crowded morning train, my phone continued to excitedly buzz with incoming messages. I used the radio silence between stations to catch up on what I had previously missed. By this point my students were messaging me about a presentation that I was originally planning to attend with them but now had to cancel on. In a new email thread titled "Re: Unable to Connect to [Redacted System B]", the Support Team and our boss were trying to figure out how to manage an influx of confused and angry users who start their days at 9 AM. Hiding in the rubble of my inbox, which was now being periodically shelled by VMware alarm emails, was an email from M. Bison stating that they'd be working remotely today because they were run over by a cyclist the night before.

As the subway lurched towards my final destination, the scope of the situation began to dawn on me. More systems were becoming unavailable and the executives were breathing down our necks. Furthermore, aside from myself, all of the senior-most members of our team were away in Washington state at a conference. They were likely still sound asleep in their hotel beds.

It was half past 10 before I made it in to the office. By this point, individual developers had begun messaging me that their development environments (conveniently hosted as VMs in our data center) were down and so was our code repository. I took a minute to grab my laptop and a notebook from my desk and then immediately proceeded to kidnap the people I saw for an expedition to the server room.

Along for the ride were Guile who had already visited the servers once today, Cammy who wanted some adventure, Axl who was the newest full-time member of the team, and a student named Dan who had never seen our servers before.

At this point I was certain our issues were still being caused by the network. I suspected one of the core switches of our internal network had failed and I intended to prove it. We all gathered in the server room and began investigating. First we checked that all of our hardware was receiving power and all the cables were connected properly. In the past we had problems with unauthorized people gaining access to the server room. Sometimes this led to them becoming ensnared on some loose cabling and causing an outage.

No dice.

Everything looked normal so far. So I decided to test my theory and check out the switches which turned out to be fine. As I ran through the possibilities of what could be wrong, I had Axl handling communications with the outside world. Concurrently, M. Bison was directing the support team to schedule a noon meeting with us developers to figure out how our monitoring notifications work, who gets them, and if we're meeting our SLAs. Let's put aside the wastefulness of holding such a meeting mid-crisis and address the elephant in the room. We're software developers. You're lucky any one of us knows anything about running infrastructure. We're in the middle of incident response and you want a primer on something trivial like that this very instant???

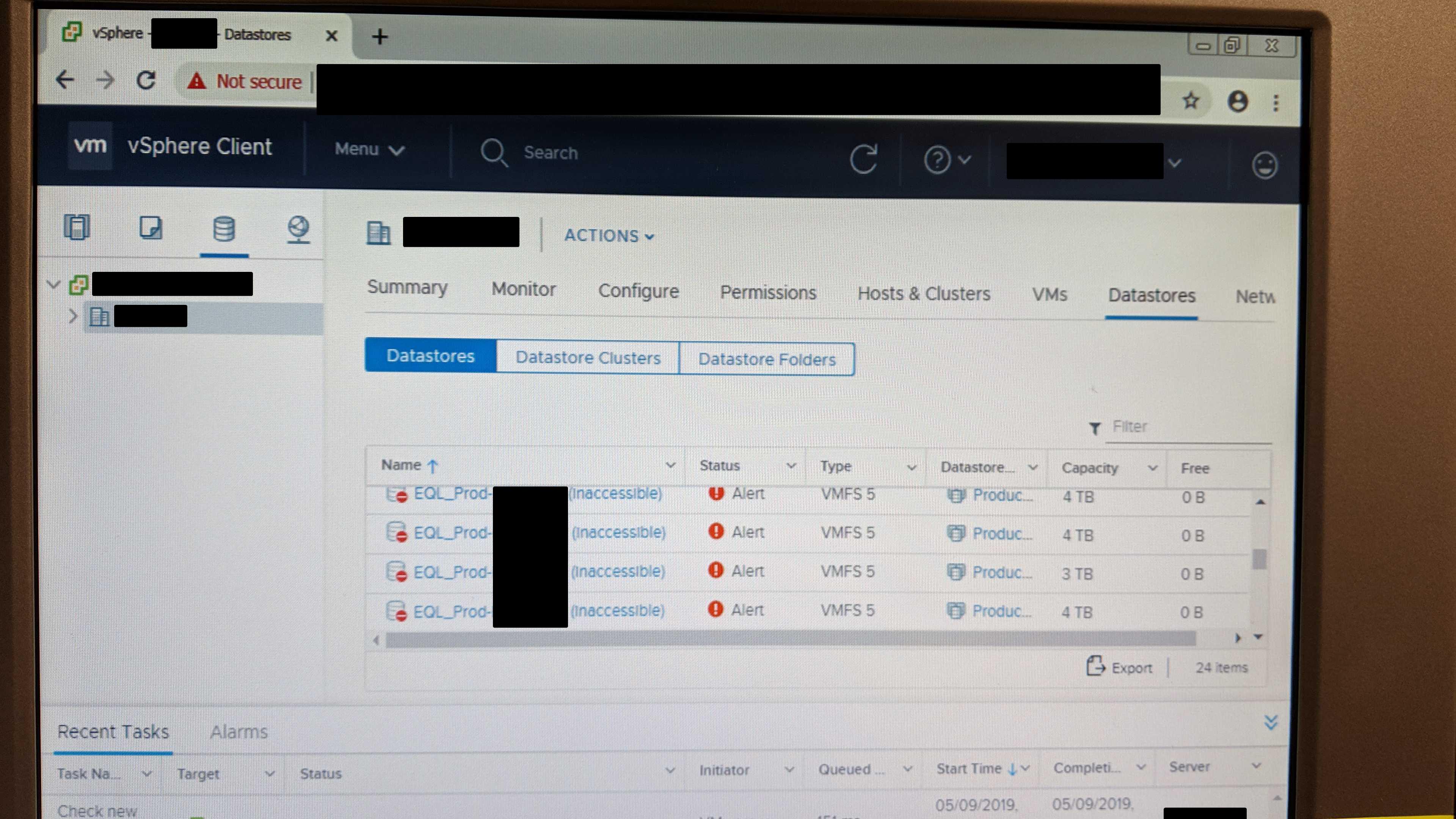

By 11:30 ET, everyone in Washington was awake (probably thanks to a complimentary wake up call from our boss). Ken and Ryu began messaging me to find out what's going on just as we had discovered the true cause of our problems… our datastores were inaccessible. Why?

Tetraphobia

There's a common superstition in many East Asian countries that the number 4 is bad luck. The reasoning behind it is that the word for "4" sounds either similar to or exactly like the word for "death" in a number of languages. So it's not unusual for the more superstitious to avoid the number 4. Yet, we failed to heed superstition and before we knew it death had come for our storage array and taken 4 drives with it.

Our virtual environment was backed by 3 SANs, 2 older EqualLogic ones and 1 newer Compellent one. Both of the older ones were well out of warranty and one of them had a failing NIC. Fortunately, enterprise storage comes with dual network adapters just in case. However, the other older unit was the one that suffered all of the drive failures. Even at RAID 50, when 4 out of 24 disks fail, so does your array. (Don't worry, how it came to this will be explained in the next installment of this series.)
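For anyone wondering why RAID 50 couldn't save us, here is a minimal sketch of the general rule. RAID 50 stripes data across several RAID 5 groups, and each group can only lose one disk. The layout below (two 12-disk groups, no hot spares) is purely an assumption for illustration, since the real group layout isn't covered in this post, and `raid50_survives` is a hypothetical helper, not anything from the SAN's tooling.

```python
from collections import Counter


def raid50_survives(failed_disks, disks_per_group):
    """Return True if every RAID 5 group has lost at most one disk.

    failed_disks: zero-based slot numbers of the failed disks.
    disks_per_group: size of each RAID 5 group (assumed equal-sized).
    """
    # Map each failed disk to the RAID 5 group it belongs to.
    failures_per_group = Counter(disk // disks_per_group for disk in failed_disks)
    # A RAID 5 group tolerates exactly one failed member.
    return all(count <= 1 for count in failures_per_group.values())


# Hypothetical layout: 24 disks as two 12-disk RAID 5 groups.
print(raid50_survives([3], 12))             # a single failure
print(raid50_survives([3, 15], 12))         # one failure in each group
print(raid50_survives([3, 7, 15, 20], 12))  # four failures
```

Under that assumed two-group layout, any 4 failures must put at least 2 in the same group, so the array is lost no matter which disks die. A layout with more, smaller groups could in principle survive a lucky spread of failures, but only if no group loses a second disk.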

By this point it was already well past noon. Cammy had snuck out of the server room to bring everyone some bubble tea. We took the tea, the damaged disks, and some diagnostic data from the SANs back with us to the main office. The pointless noon meeting had now been cancelled and replaced by a situation briefing and a call to Dell support. The still of the warm spring afternoon air as we walked back to our impending doom would be the last calm we'd see for weeks.

On-scene Commander

As the senior-most member of the team on site and one of the people who built the current iteration of our infrastructure, I was effectively in charge during a perfect storm. I had dealt with minor outages on my own before but this was a full-blown disaster. I'd be lying if I said I wasn't absolutely terrified and overwhelmed. There was no training or protocol that prepared any of us for this so we were playing it by ear the entire time. My boss, who was still at home due to their accident, could do little more than try to grease the wheels if we needed something from upper management or get in the way with outlandish demands. Unfortunately, M. Bison had a history of burning bridges and upper management really wasn't feeling very sympathetic to our plight. The members of the team in Washington were heading to the airport and wouldn't be back on solid ground until later that evening.

My marching orders were simple: do whatever it takes to get our systems back online. I had the emergency authority to run the department but still no authority within our overall organization. That meant no authority to spend money or call Dell support on my own. So my first act was less ambitious than one would expect. I commandeered a conference room for our full-time staff and sent all of the students home. The students couldn't get any work done since the development environments were down, and they also couldn't help in the work that was to come.

Once our Support Team completed the call with Dell we found ourselves in an even worse position. Not only was our hardware out of support but they no longer manufactured, sold, or even had in stock the exact model of disk drive that was compatible with our array. I gathered all the available devs in our conference room turned war room and we scoured the internet for any supplier who had those drives in stock. We found 2 suppliers, one of which was in Queens. While the devs debated whether we could place an order and catch a train to their warehouse in order to intercept it before it shipped, the Support Team was tasked with hunting down Purchasing and getting authorization to buy the drives.

Meanwhile, M. Bison insisted that I provide hourly updates via conference call while also rebuilding the environment from scratch on the hosts that weren't backed by the failed SAN. It was a tall order. We were looking at spinning up a couple of hundred production, integration, and development VMs on a newer operating system (the lost VMs were Win2k8 R2 but the VM cluster not affected by our storage failure was only licensed for Win2k16) and then deploying all the necessary software and tooling to each one. So I sent the team to grab some lunch. It was going to be a long night ahead. While they were doing that, I began transferring database backups and mapping out which systems we needed to build out first.

The gang returned with lunch for me. We assigned everyone a system they needed to build out and I took a few moments to eat. I started getting a lot of questions almost immediately. As you might expect, a group of software developers might not have much experience with building out new environments from scratch. "How do I create a new VM?" "How do I join it to the domain?" "Do we want to do a standalone installation of the database server or add a node to an existing cluster?" It was unfair to expect everyone to have full mastery of these tasks, especially since the majority of them didn't have the necessary permissions to perform some of those actions nor any reason to have ever done so previously. Yet here we were, undergoing trial by fire.

Years of filling in for an actual sysadmin had made these tasks trivial for me, but I couldn't get anything done if I was interrupted every 10 seconds. So I decided to demonstrate how to do some of the most common tasks and we even recorded some of it so everyone could do it even if I was struck down by lightning. If only I was fortunate enough to be struck down by lightning…

The slog continued. After 7:30 PM I sent anybody remaining home. Progress was slowing to a crawl and tomorrow would be another uphill battle. Our Support Team got the emergency purchasing approval for the hard drives but the procurement officer assigned to the case chose Ground Shipping instead of the fastest option available. Ken and Ryu had landed at JFK and were heading home before walking in to hell in the morning. On the ground, we had successfully verified and restored most of the database backups. After the mandatory 8 PM briefing I decided I would head home too. I'd essentially been working since I woke up over 13 hours earlier and figured that by the time I got home the remaining database backups might be done restoring.

Once I got home, I had dinner and got back to work. At 12:34 AM on May 10, 2019 I called it quits for the day and sent out the last status update of the night.

Situation Briefing

Most systems are inaccessible due to disk failures in the storage array that backs our ███████████ Cluster of vSphere volumes. The bulk of our infrastructure and applications are still hosted there because they're running on VMs in the ██████████ Cluster or ██████ Cluster. DNS (░░░░░░░░ is our DNS server in the old environment) & user profile (the file share with user profiles also lives on the bad storage) issues that resulted from the disk problems led me to believe it was a switch failure yesterday morning. Fortunately, it wasn't a switch failure. Unfortunately, it was a disk failure.

Today's goal is to migrate what we can to our new ███████████ Cluster while we're waiting for our replacement HDDs. The VMs on the ███████████ Cluster use our new storage and are operating without problems. Conveniently our Docker environments also live here. (Warning: the new environment uses significantly newer versions of critical software. IIS8, SQL Server 2014, Windows Server 2k16).

A handful of dev VMs are accessible because they're on the hybrid-SAN (SSDs+HDDs) which has sustained fewer disk failures than the fully mechanical disk SANs (HDDs only). vSphere is functional but inaccessible due to DNS issues. To access it, remote in to a working dev VM or the backup and time server via its internal IP address ░░░.░░░.░░░.░░░. Our RDP licensing server is down in this environment so only 2 sessions to each machine. Also, a reminder that vSphere sessions are shared so try to not step on each other's toes when logging in to stuff. Feel free to coordinate yourselves by using ██████████ as our emergency management center.

Morning Action Plan

- Bring Coffee, Tea and/or Chocolate. (Whoever arrives first is commander-on-site until me or Ken arrive.)

- Someone get on top of the HDD vendor to see if it's not too late to get those drives to us faster than "Ground" shipping.

- Someone else fix the overflowing transaction logs on new ██████ (░░░░░░░░)

- A third person needs to find if anyone has recent git repos for production systems checked out on their local machines. Since TFS is out of commission we need some source code to build & manually deploy versions of our apps.

- Obtain the following backups: ██████, ████████████. If found, restore them to the new production db AOAG (░░░░░░░░). You'll want to google "how to restore to an always on availability group" if you've never done this before.

- Make a note for who's working on what.

Thanks for giving this a read. It's the first time I've written about my "real" job here and it turned out quite a bit longer than expected. This post is dedicated to a friend and regular reader who has been lobbying me for years to tell this tale. We once fought together in the trenches so I couldn't refuse the request when I found out he was getting a new job. The next post in this series will cover what happened once the cavalry rolled in to town and how we wound up in this unusual predicament.