Posted on 9th Apr, 2021 in Work Life

After a brutal couple of days at the office, it was finally Saturday! Saturday is a day I look forward to normally. However, this morning as I stumbled out of bed bleary-eyed, I was dreading it. After the perfect storm of failures days before and the previous day's extraordinary rendition of our failing SAN, I was exhausted. There's no rest for the weary though. After breakfast, I was back at my PC trying to finish rebuilding our production environments with Ken, Ryu, and Rashid.

For years our team had been lobbying to allow for remote work and for years we were told no by our boss, M. Bison. In response to this crisis, though, M. Bison made an exception. We had the privilege and luxury of working from home over the weekend, for free, to fix a mess that should never have occurred in the first place. I suppose I should unpack all of that before I get ahead of myself.

A Look into the Abyss

On this relatively calm morning, while I was installing software on new VMs, I had some time to think. How could 4 hard drives fail simultaneously? As I caught up on some messages I thought back to before the start of this incident. My head started to spin. Everything went black as I started to feel my sanity slipping from me.

Before Servergate, the last time I visited the server room was to move some backup tapes to our offsite storage location. Since I only visit once every couple of months, I typically take a look to make sure everything is in order while I'm there. Recently they began upgrading the fire suppression system for the server room from none to a gas suppression system. The irony of not previously having a fire suppression system is not lost on me, considering that the building our datacenter was in is famous for being a deathtrap when it catches fire.

The contractors made every effort to not disturb our operations as part of the installation. Just kidding! At one point they built a wooden shed around all of the server racks to protect them from falling debris. Of course, that completely prevented them from dissipating heat and the servers started sending me angry emails about how they were shutting down. When I went over there to investigate that incident, there was a visible heat haze in the room so I can't really blame the servers for complaining that it was too hot. That aside, this detail does matter to the story. Part of the work they were doing involved drilling and cutting through walls and ceilings. This created a considerable amount of vibration. Since we're in New York where earthquakes are rare, we don't build our datacenters with vibration damping in mind.

You can probably see where I'm going with this. Prior to all this there was 1 failed drive in our SAN. Shortly after the overheating incident, 2 hard drives failed due to construction vibrations. All of this happened just a couple of months before Servergate. That was the penultimate time I was in the server room before Servergate. The next time I would be there was a few days ahead of our titular disaster. On that visit, I noticed the 3 failed hard drives were still there. The same 3 drives I had previously reported as dead months prior.

How could this happen? It's the height of incompetence to not replace hard disks as they fail.

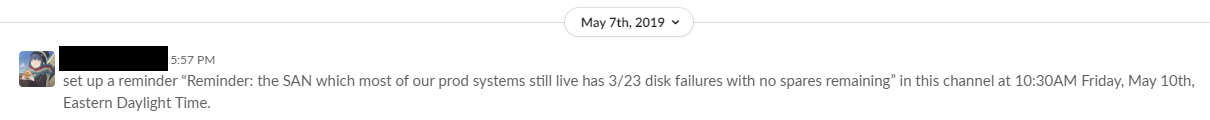

I was at once enraged and discouraged. When I considered that the hell we were now going through was preventable, that I had foreseen this possible outcome and warned of it, that I had repeatedly reported this problem to the parties most capable of remediating it to no avail… I lost the will to persist in my futile efforts to tame the chaos around me. When I noticed those dead drives were still there, not only did I raise the issue again but I even set a Slack reminder about it. My cries had fallen on deaf ears and now we were all damned. I did my best to finish my tasks for the day and put it out of my mind for the moment.

Access Denied

At 6 PM M. Bison emailed us to confirm if Ken had received the now-pointless replacement hard drives at his house. The drives had arrived, but with our SAN now in the custody of a data recovery specialist, we wouldn't be able to install them.

The following day was more of the same, reinstalling and configuring software. The new production environment was nearly ready for testing and soon we'd be back in business. As part of our 5 PM briefing with M. Bison on Sunday evening, we decided on a plan of action for the following day. We were going to have the Support Team test our applications and if they were fit for use, alert the stakeholders.

However, in order for that to happen we needed a few things. First, we needed to find some missing files that defined frequently used reports for one of our applications. Secondly, we needed to make some networking adjustments. We had spent the day emailing back and forth with IT's Networking team to get them to let our new environment through the firewall. Although the new environment lived in the same physical space, the servers it ran on were on a different physical network than the servers that were crippled by the loss of our SAN. This resulted in another trip to the server room, all the way from home. Ken made the call that we would all be working remotely the following day and then the two of us hopped on the train to meet at the server room.

We arrived around 8 PM. Sunday evenings are well outside of the building's normal operating hours. Naturally, the doors were locked and nobody was stationed inside the lobby. However, since people needed access to this building around the clock, they had recently installed an RFID card scanner that would unlock one of the doors. After trying each of the doors, I noticed the card scanner and gave it a try. It didn't work. Ken also gave it a try. It still didn't work. The card scanner would activate but wouldn't unlock the doors for us. After messing around trying to infiltrate the building for a bit, we emailed M. Bison for help. They advised that we should visit Security's main office and try to talk someone into letting us in. Someone finally let us in after some back and forth between us, Security, M. Bison, and Facilities.

While we were working in the server room, M. Bison was busy firing off a flurry of emails to IT's Networking team, the head of that team, and the head of IT. We needed our firewall request expedited. At 9:13 PM, our requests were approved. That freed us up to look for those missing report files. First, we checked the tape backups but they didn't have the files we needed. So, we pulled out the old physical server the application originally ran on (before we virtualized everything) and dusted it off. After powering it on and connecting it to an old TV that was hanging on the wall, we found our files and transferred them to where they needed to be. Mission complete.

At 10 PM M. Bison sent out a final set of emails, thanking everyone who was slaving away through this horrid weekend and updating our stakeholders. The last email we received that day was titled "Taking my pain medication now" and it requested a final update on some open items before 9 AM. Servergate wasn't over but at least this week was. It'd be another 6 hours before the next email came in, but at that moment all I wished for was eternal sleep.

I guess this chapter of the tale is a little different than the previous ones. Having time for a little self-reflection, I started to understand the forces that drove us to this point. However, this isn't even close to the end. In the interest of time, I'll try to cover the entirety of the next week in the next entry. Don't hold me to that though!